NSX ALB as Control Plane Endpoint Provider

When integrated with Tanzu Kubernetes Grid, NSX ALB can provide the endpoint VIP for clusters and load-balance traffic across the control plane nodes of each cluster. This feature also lets you customize the API server port and the endpoint VIP network of the cluster.

The following table compares the features supported by NSX ALB and Kube-Vip:

| Feature | NSX ALB | Kube-Vip |
|---|---|---|
| Cluster endpoint VIP for IPAM | Provided by NSX ALB | Managed by the customer manually |
| Load balancing for the control plane nodes | Traffic is load balanced to all control plane nodes | Traffic is routed only to the leader control plane node |
| Port for the API server | Any port (default is 6443) | Any port (default is 6443) |

Configure NSX ALB as the Cluster Endpoint VIP provider

To configure NSX ALB as the cluster endpoint VIP provider in a management cluster:

- Create a management cluster configuration YAML file, and add the following field in the file:

      AVI_CONTROL_PLANE_HA_PROVIDER: true

- If you want to use a static IP address for the endpoint VIP, add the following field in the file:

      VSPHERE_CONTROL_PLANE_ENDPOINT: <IP-ADDRESS>

  Ensure that the VIP is present in the static IP pool specified in your VIP network.

- If you want to specify a port for the API server, add the following fields in the file:

      AVI_CONTROL_PLANE_HA_PROVIDER: true
      CLUSTER_API_SERVER_PORT: <PORT-NUMBER>

  Note: The management cluster and its workload clusters will use this port to connect to the API server.

- Create the management cluster by using the `tanzu management-cluster create` command.

NSX ALB is now configured as the endpoint VIP provider for the management cluster.
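The static IP requirement in the steps above can be checked before you create the cluster. The following is a minimal Python sketch and is not part of the Tanzu CLI or NSX ALB tooling. The function name `vip_in_static_pool` and the sample addresses are hypothetical, and the sketch assumes that the static IP pool of your VIP network is defined as an inclusive start-to-end address range.

```python
import ipaddress


def vip_in_static_pool(vip: str, pool_start: str, pool_end: str) -> bool:
    """Return True if vip lies inside the inclusive range pool_start..pool_end."""
    address = ipaddress.ip_address(vip)
    start = ipaddress.ip_address(pool_start)
    end = ipaddress.ip_address(pool_end)
    if start > end:
        raise ValueError("pool_start must not be greater than pool_end")
    return start <= address <= end


# Hypothetical values: replace them with the value you plan to use for
# VSPHERE_CONTROL_PLANE_ENDPOINT and the static IP pool of your VIP network.
print(vip_in_static_pool("10.10.0.50", "10.10.0.10", "10.10.0.100"))  # True
print(vip_in_static_pool("10.10.1.5", "10.10.0.10", "10.10.0.100"))   # False
```

If the check returns `False`, either choose a VIP inside the pool or extend the pool before running the create command.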

To configure NSX ALB as the cluster endpoint VIP provider in a workload cluster:

- Create a workload cluster configuration YAML file, and add the following field in the file:

      AVI_CONTROL_PLANE_HA_PROVIDER: true

- If you want to use a static IP address for the endpoint VIP, add the following fields in the file:

      AVI_CONTROL_PLANE_HA_PROVIDER: true
      VSPHERE_CONTROL_PLANE_ENDPOINT: <IP-ADDRESS>

  Ensure that the VIP is present in the static IP pool specified in your VIP network.

- Deploy the workload cluster by using the `tanzu cluster create` command.

NSX ALB is now configured as the endpoint VIP provider for the workload cluster.

Separate VIP Networks for Cluster Endpoints and Workload Services

Note: If you want to use this feature on NSX ALB Essentials Edition, ensure that the network you are using has no firewalls or network policies configured on it. If firewalls or network policies are configured on the network, deploy NSX ALB Enterprise Edition with the auto-gateway feature instead.

When using NSX ALB to provide the cluster endpoint VIP, you can customize the VIP networks used for your cluster endpoint and for the external IPs of workload services (load balancer and ingress). You can place them in different VIP networks to enhance security and fulfil other network topology requirements.

Configure Separate VIP Networks for the Management Cluster and the Workload Clusters

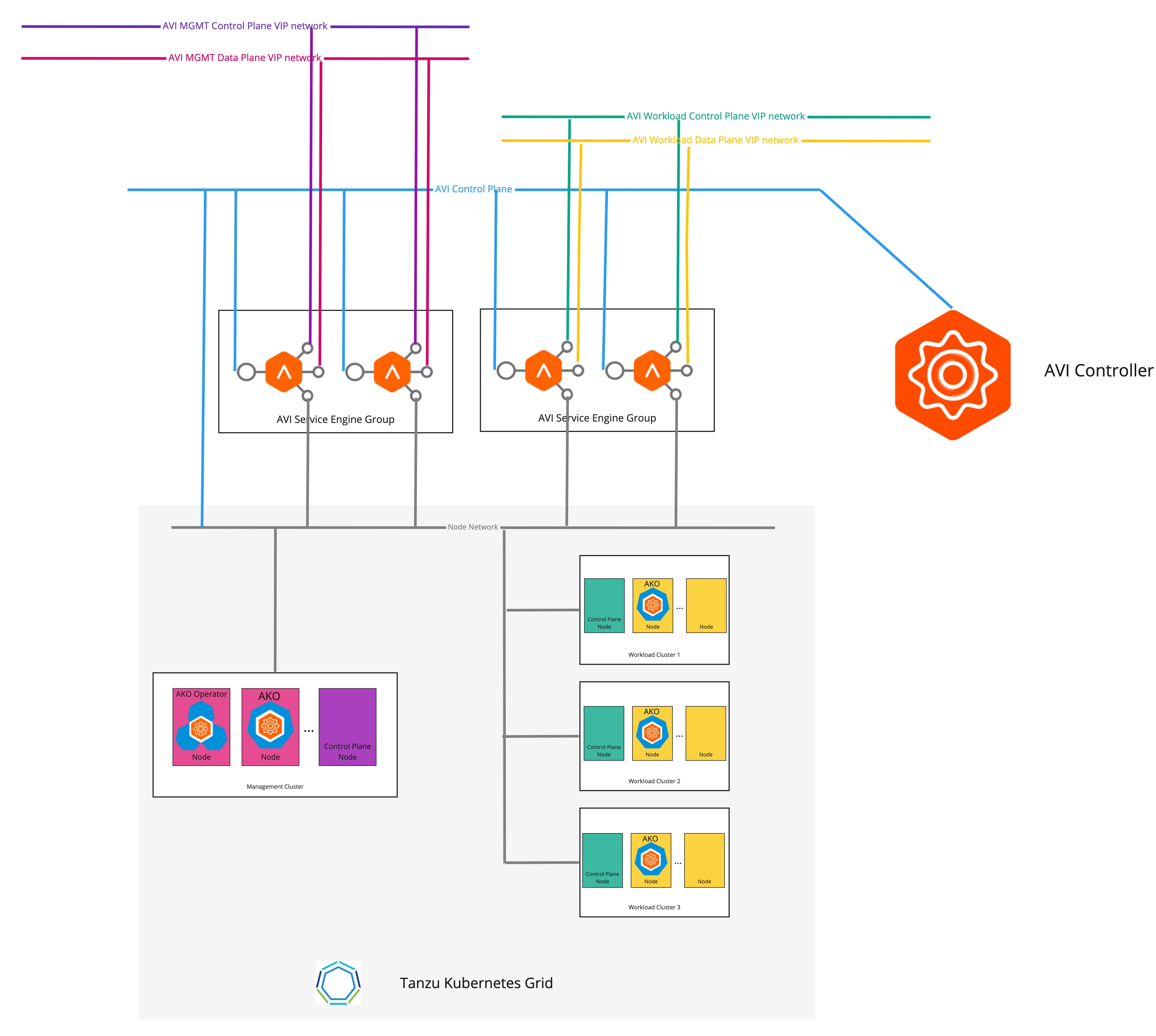

The following diagram describes the network topology when separate VIP networks are configured for the management Cluster and the workload clusters:

To configure separate VIP networks for the management cluster and the workload clusters:

- Create a management cluster configuration YAML file, and add the following fields in the file:

      AVI_CONTROL_PLANE_HA_PROVIDER: true
      # Network used to place workload clusters' services external IPs (load balancer & ingress services)
      AVI_DATA_NETWORK:
      AVI_DATA_NETWORK_CIDR:
      # Network used to place workload clusters' endpoint VIPs
      AVI_CONTROL_PLANE_NETWORK:
      AVI_CONTROL_PLANE_NETWORK_CIDR:
      # Network used to place management clusters' services external IPs (load balancer & ingress services)
      AVI_MANAGEMENT_CLUSTER_VIP_NETWORK_NAME:
      AVI_MANAGEMENT_CLUSTER_VIP_NETWORK_CIDR:
      # Network used to place management clusters' endpoint VIPs
      AVI_MANAGEMENT_CLUSTER_CONTROL_PLANE_VIP_NETWORK_NAME:
      AVI_MANAGEMENT_CLUSTER_CONTROL_PLANE_VIP_NETWORK_CIDR:

  For more information on creating a management cluster configuration file, see Create a Management Cluster Configuration File.

- Create the management cluster by using the `tanzu management-cluster create` command.

The endpoint VIP of the cluster and the external IP of the load balancer service are now placed in different networks.
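Before creating the cluster, it can help to confirm that the CIDR values you entered really describe separate networks. The following is a rough Python sketch, not an official validation tool: the function name `find_overlaps` and all CIDR values are hypothetical. It only reports which settings share address space, so you can decide whether any overlap is intended.

```python
import ipaddress
from itertools import combinations


def find_overlaps(networks: dict[str, str]) -> list[tuple[str, str]]:
    """Return pairs of setting names whose CIDR ranges overlap."""
    parsed = {name: ipaddress.ip_network(cidr) for name, cidr in networks.items()}
    return [
        (first, second)
        for first, second in combinations(parsed, 2)
        if parsed[first].overlaps(parsed[second])
    ]


# Hypothetical CIDR values for the four *_CIDR fields in the configuration file.
vip_networks = {
    "AVI_DATA_NETWORK_CIDR": "10.20.0.0/16",
    "AVI_CONTROL_PLANE_NETWORK_CIDR": "10.10.0.0/16",
    "AVI_MANAGEMENT_CLUSTER_VIP_NETWORK_CIDR": "10.30.0.0/16",
    "AVI_MANAGEMENT_CLUSTER_CONTROL_PLANE_VIP_NETWORK_CIDR": "10.40.0.0/16",
}

# An empty list means no two settings share address space.
print(find_overlaps(vip_networks))
```

Note that `ipaddress.ip_network` rejects a CIDR with host bits set (for example `10.10.0.5/16`), which also catches a common typing mistake.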

Configure Separate VIP Networks for Different Workload Clusters

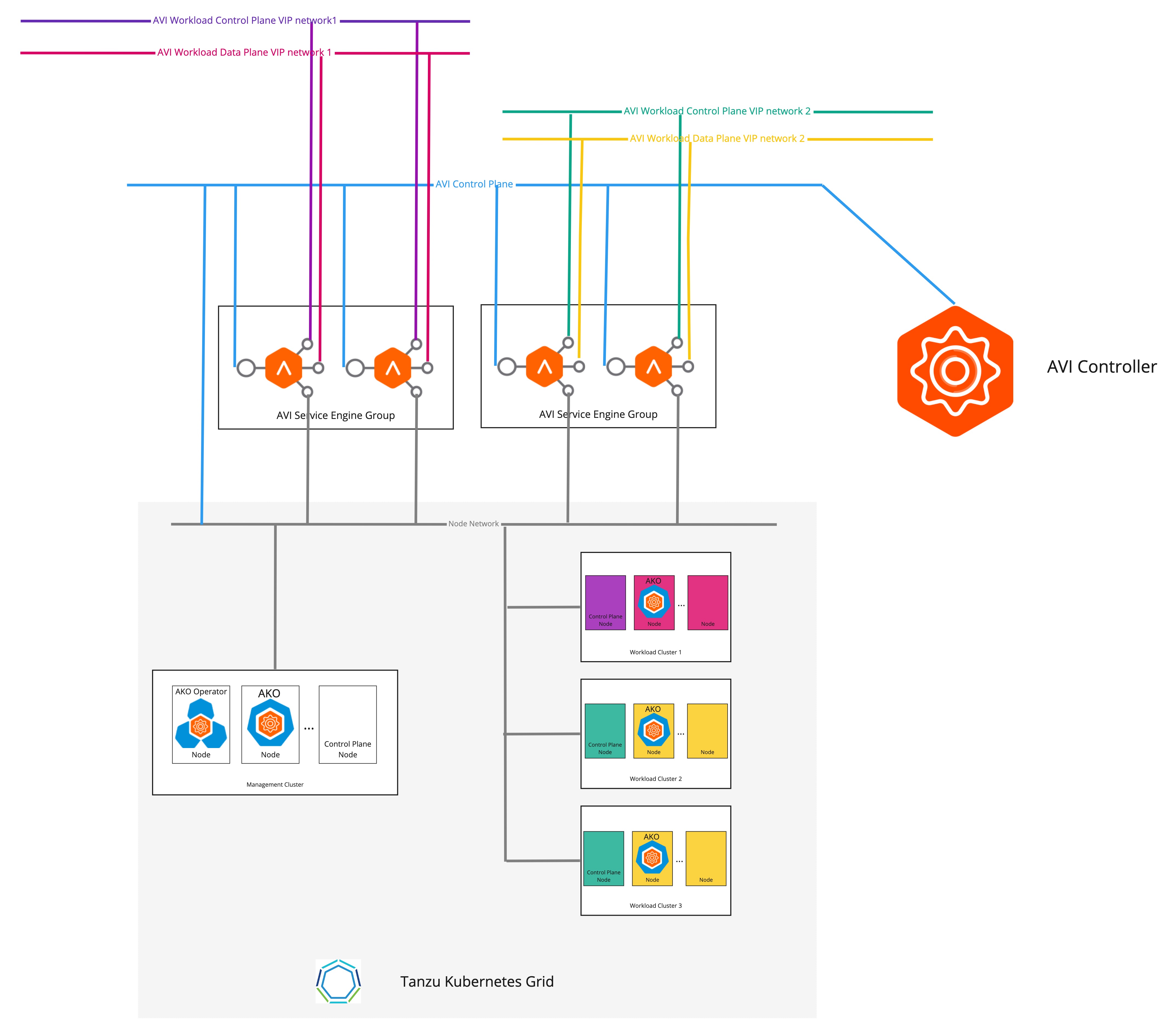

The following diagram describes the network topology when separate VIP networks are configured for different workload clusters.

To configure separate VIP networks for different workload clusters:

- Create an AKODeploymentConfig CR object as shown in the following example:

      apiVersion: networking.tkg.tanzu.vmware.com/v1alpha1
      kind: AKODeploymentConfig
      metadata:
        name: install-ako-for-dev-cluster
      spec:
        adminCredentialRef:
          name: avi-controller-credentials
          namespace: tkg-system-networking
        certificateAuthorityRef:
          name: avi-controller-ca
          namespace: tkg-system-networking
        controller: 1.1.1.1
        cloudName: Default-Cloud
        serviceEngineGroup: Default-Group
        clusterSelector: # match workload clusters with dev-cluster: "true" label
          matchLabels:
            dev-cluster: "true"
        controlPlaneNetwork: # clusters' endpoint VIP come from this VIP network
          cidr: 10.10.0.0/16
          name: avi-dev-cp-network
        dataNetwork: # clusters' services external IP come from this VIP network
          cidr: 20.20.0.0/16
          name: avi-dev-dp-network

- Apply the changes on the management cluster:

      kubectl --context=MGMT-CLUSTER-CONTEXT apply -f <FILE-NAME>

- In the workload cluster configuration YAML file, add the required fields as shown in the following example:

      AVI_CONTROL_PLANE_HA_PROVIDER: true
      AVI_LABELS: '{"dev-cluster": "true"}'

- Create the workload cluster by using the `tanzu cluster create` command.

The endpoint VIP of the clusters and the external IP of the load balancer service are now placed in different networks.
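The procedure above depends on the `AVI_LABELS` value of the workload cluster agreeing with the `clusterSelector.matchLabels` of the AKODeploymentConfig object. The following Python sketch illustrates that relationship under two assumptions: that the selector follows the usual Kubernetes `matchLabels` semantics (every key and value in the selector must be present on the cluster), and that the JSON in `AVI_LABELS` becomes the labels of the cluster. The function name `selector_matches` is hypothetical, and this is not the AKO operator's implementation.

```python
import json


def selector_matches(match_labels: dict[str, str], avi_labels_json: str) -> bool:
    """Return True if every selector key/value pair appears in the cluster labels."""
    cluster_labels = json.loads(avi_labels_json)
    return all(
        cluster_labels.get(key) == value for key, value in match_labels.items()
    )


# Selector taken from the AKODeploymentConfig example above.
match_labels = {"dev-cluster": "true"}

print(selector_matches(match_labels, '{"dev-cluster": "true"}'))   # True
print(selector_matches(match_labels, '{"dev-cluster": "false"}'))  # False
print(selector_matches(match_labels, '{"team": "dev"}'))           # False
```

A mismatch here, such as a misspelled key or the value `true` written without quotes in the JSON, would mean that the cluster is not selected by this AKODeploymentConfig object.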